Touching base with SonarQube and cognitive complexity again, a must-have companion for our development effort.

I was reviewing a pull request today and saw a new function. My first thought was "unnecessary cognitive complexity". So I decided to challenge that thought, check whether it held up, and write it down here for anyone who may be interested.

Current state

My first problem was finding a service that would calculate the score for me. For some reason, I can't get my SonarQube integration to show it directly in Visual Studio Code (if anyone knows how to do that, please share).

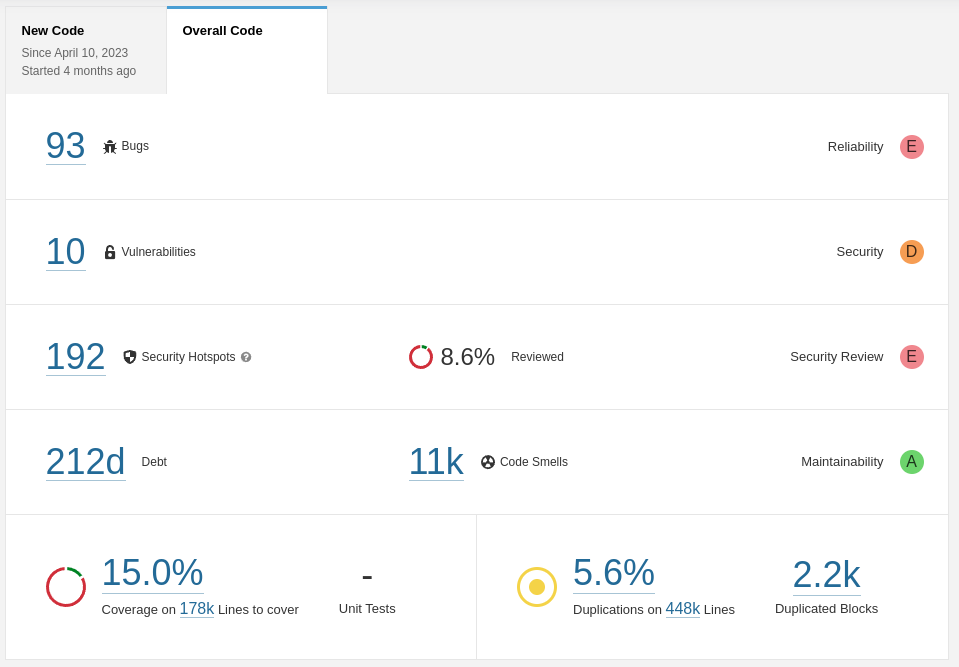

I was convinced that SonarQube would show the score in its web UI, so my first step was to update the project analysis with the new code. I connected to our SonarQube service to see what we had and found that we had pushed only one analysis, on the 10th of April. Very sad...

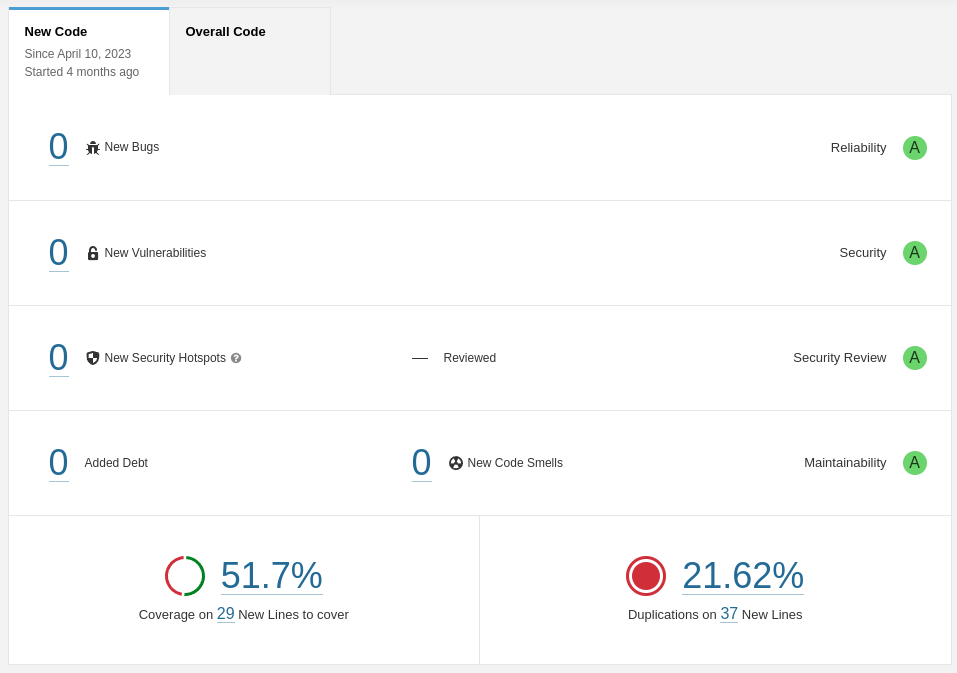

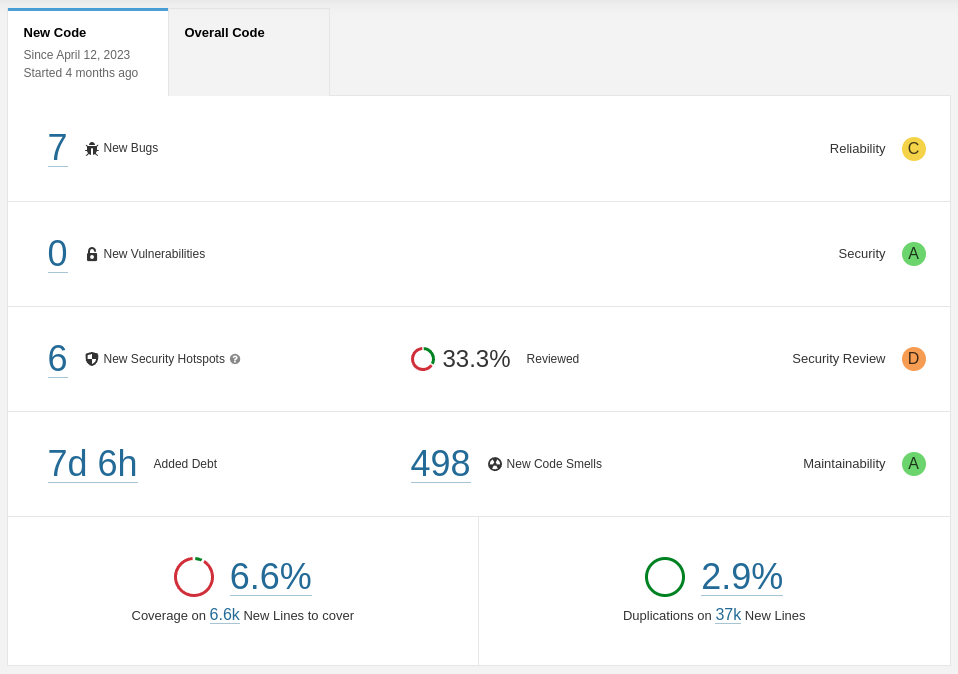

So I ran a new analysis with the updated code and got these numbers:

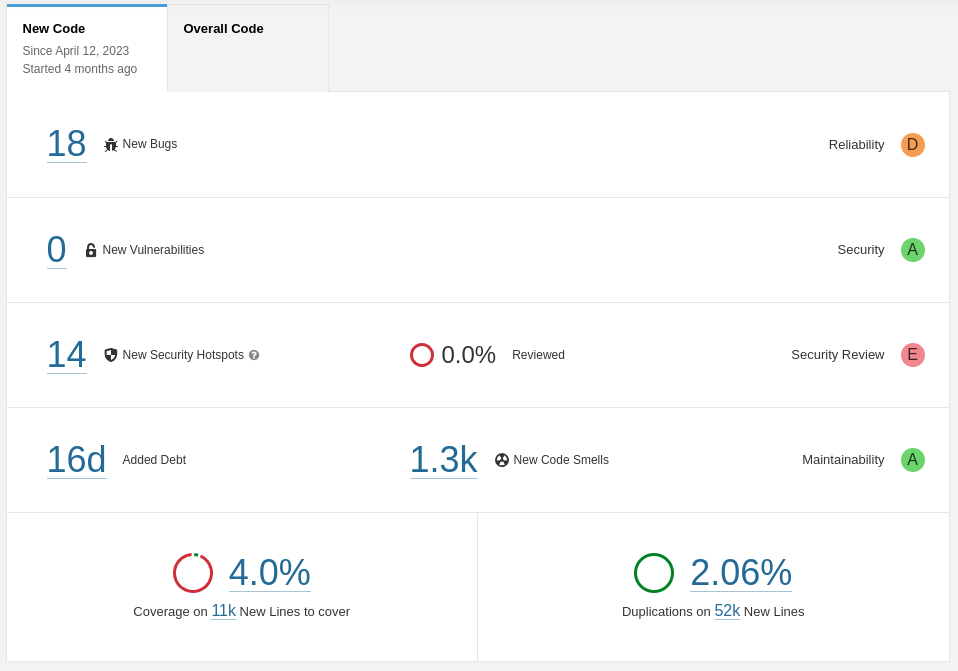

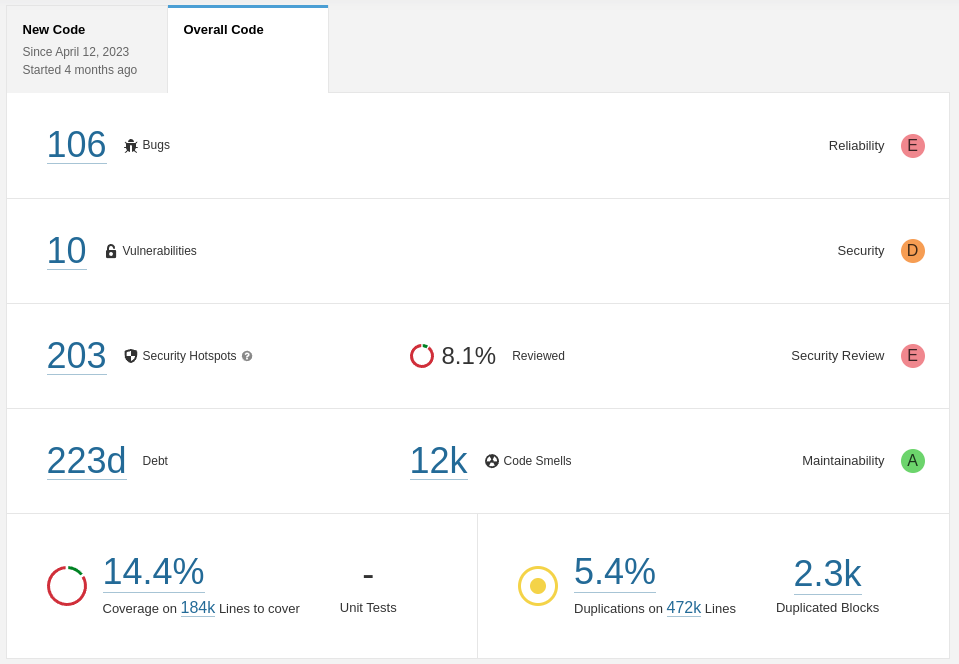

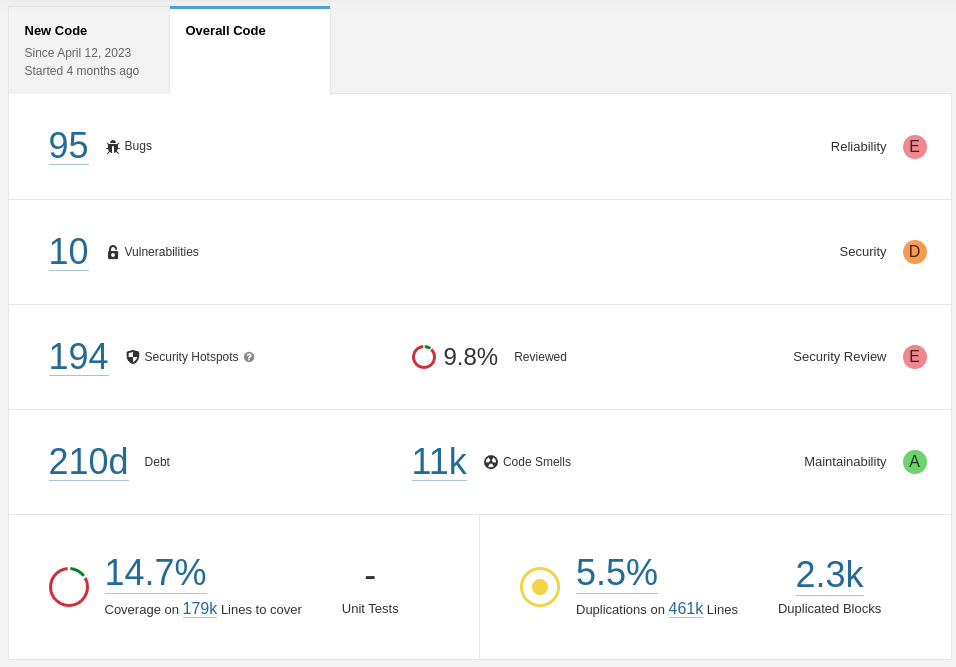

I dedicated some time to analyzing the results, correcting some errors (there was a PHP file with incorrect syntax!!), and excluding some newly added libraries that are not under our control. After this first cleanup, I got these final numbers for the start of September 2023:

So, in the new code, we added 7 bugs, 6 security issues, and 498 code smells. In the overall comparison, we added 2 bugs, 2 security issues, and 2 days of technical debt. All in all, not bad given the amount of code we produce every month.

Code coverage has gone down, which is expected since we add code faster than tests, and the duplicated-code indicator has also gone up.

Not bad...

Cognitive complexity

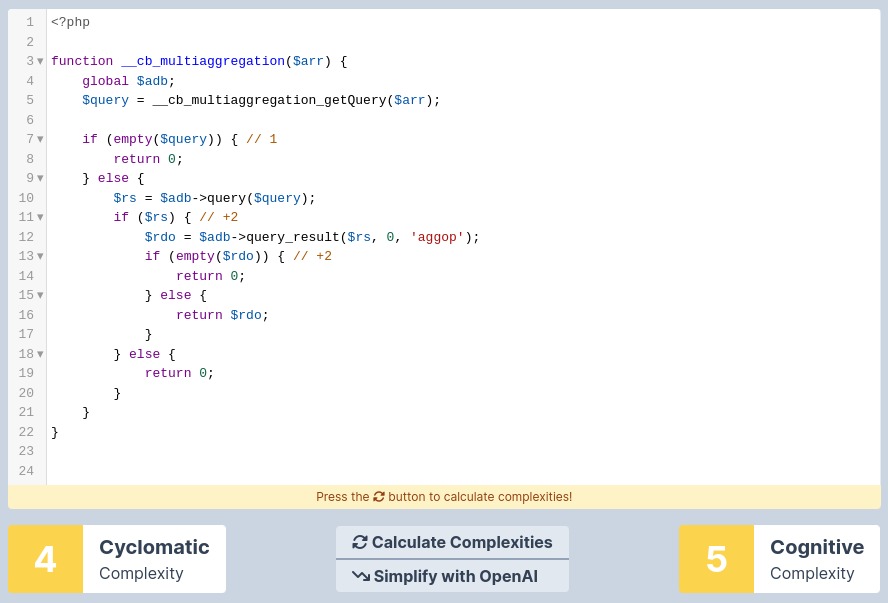

With an up-to-date analysis in hand, I navigated to the function I wanted to analyze, only to find that its cognitive complexity score was nowhere to be seen: SonarQube only reports functions whose score exceeds the rule's threshold. So I looked around and found this nice tool that calculates the score for me, although it doesn't show where the increments come from (I couldn't find a tool that did that).

This is the function in its original form, with complexity scores of 4 and 5. I manually added comments to show where each increment of the cognitive complexity score comes from.

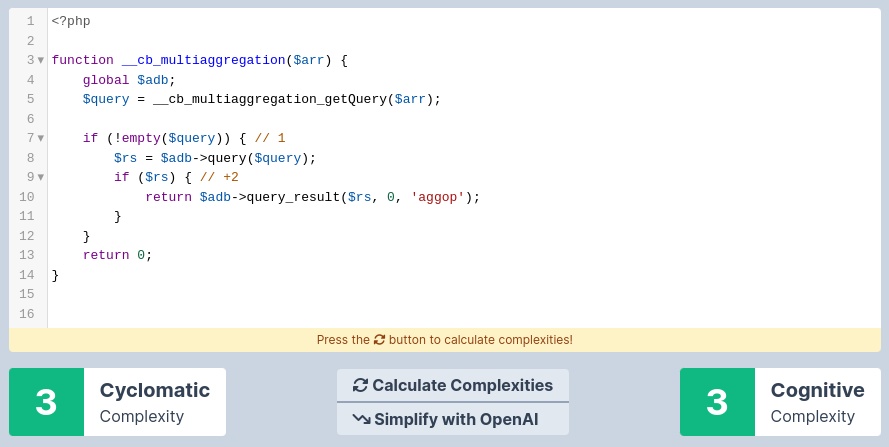
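Since the function itself only survives as a screenshot, here is a hypothetical reconstruction of the same shape in Python. It is based only on the plain-language description of the original given below, not on the code from the pull request. The names `get_first_value` and `run_query`, and the convention that `run_query` returns `None` on failure, are invented for illustration. The comments count increments the way I understand SonarSource's cognitive complexity rules: +1 for each `if` and `else`, plus one extra point per level of nesting for a nested `if`.

```python
from typing import Callable, Optional, Sequence

# Hypothetical database hook: returns the result rows, or None if the query failed.
RunQuery = Callable[[str], Optional[Sequence[object]]]


def get_first_value(query: str, run_query: RunQuery) -> object:
    """Original shape: several exits, each returning 0.

    Cognitive complexity (counted by hand): 1 + 1 + 2 + 1 = 5.
    """
    if not query:                       # +1 (if, nesting level 0)
        return 0
    rows = run_query(query)
    if rows is not None:                # +1 (if, nesting level 0)
        value = next(iter(rows), None)  # first value, or None when there are no rows
        if not value:                   # +2 (if, +1 for nesting level 1)
            return 0
        return value
    else:                               # +1 (else)
        return 0
```

For example, `get_first_value("SELECT 1", lambda q: [42])` returns `42`, while an empty query, a failed query, or an empty first value all end in `return 0`.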

My intuition was to eliminate the redundant "return 0" statements, so I modified the function like this:

So my initial thought was correct. We went from reading

get a query, if it is empty return 0, if not, launch it, if it works, get the first value, if it is empty return 0, else return the value, if the query failed, return 0

to reading

get a query, if it is not empty, launch it, if it works, return what you get, else return 0

The difference in mental effort is clear. At the end of a long day of reading code, it is REALLY clear.
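The second reading can be sketched the same way. This is again a hypothetical Python reconstruction from the one-line description above, not the code from the pull request. The names `get_first_value` and `run_query` are invented for illustration, and `run_query` is assumed to return `None` on failure. Counting by hand with the same cognitive complexity rules gives 3: one point for the outer `if` and two for the nested one.

```python
from typing import Callable, Optional, Sequence

# Hypothetical database hook: returns the result rows, or None if the query failed.
RunQuery = Callable[[str], Optional[Sequence[object]]]


def get_first_value(query: str, run_query: RunQuery) -> object:
    """Refactored shape: one happy path and a single fallback return.

    Cognitive complexity (counted by hand): 1 + 2 = 3.
    """
    if query:                  # +1 (if, nesting level 0)
        rows = run_query(query)
        if rows:               # +2 (if, +1 for nesting level 1); None and [] both fail here
            return rows[0]
    return 0                   # single fallback for every non-happy path
```

One caveat of this particular sketch is that it returns a falsy first value (such as an empty string) as-is, whereas the longer version described above returns 0 in that case.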

Conclusion

Note that this particular case is rather pointless. On one hand, the two functions are not equivalent, since they don't return the same values in all cases (an exercise for the curious). On the other, a cognitive complexity of 5 is already very good, and there is no need to spend time making it smaller. We should be spending our effort on big functions with very high scores, of which we have plenty in EvolutioFW. I am just trying to make a point and reinforce how important it is to use SonarQube in your daily work. If you don't have an account yet, or haven't integrated it into your IDE, do so as soon as possible (sharpen the saw: dedicate time to your tools!). High priority.

HTH